One of the most significant outcomes of increased processing power and widespread adoption of the internet has been an explosion in the volume of data. Available storage has gone from a few kilobytes only a few decades ago to virtually unlimited cloud storage today.

We now produce data almost as naturally as the increase in disorder predicted by the second law of thermodynamics.

Data creation, processing and storage now employ a large portion of our global workforce. Chances are that your industry, or even your own role, has shifted towards dealing with more data.

The title of the post is inspired by the song “Be My Lover” by La Bouche, famous for its “la-da-di-da-dah” hook. The increase in data volume also drove further specialization into data engineering roles, and many of those roles have titles like “DA, DI & DE”.

Let’s look at those data engineering-related functions. The goal is not to draw clear boundaries between them, since many overlap and their definitions differ between companies.

Database Administrator (DBA)

General Role: Managing and maintaining database systems to ensure their performance, availability, and security.

Skills: Database management systems (MySQL, PostgreSQL, Oracle), backup and recovery, performance tuning, and security.

Data (Pipeline) Engineer (DE)

General Role: Building and maintaining data pipelines, ensuring data is accessible, reliable, and ready for analysis.

Skills: SQL, Python/Scala, ETL (Extract, Transform, Load) processes, data warehousing, and knowledge of cloud platforms (AWS, GCP, Azure).
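To make the ETL idea concrete, here is a minimal sketch using only Python's standard library. The sample CSV, the `orders` table, and the function names `extract`, `transform`, and `load` are assumptions made up for illustration; a real pipeline would read from actual source systems and write to a warehouse rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,
3,carol,7.25
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount and cast types."""
    return [
        (int(r["order_id"]), r["customer"].strip().title(), float(r["amount"]))
        for r in rows
        if r["amount"].strip()
    ]


def load(conn, records):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


# Run the three stages end to end against an in-memory database.
conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
total = conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
print(total)
```

Keeping each stage as its own function makes it easier to test the cleaning rules separately from the storage layer, which is a large part of what keeping data "reliable" means in day-to-day work.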

Machine Learning Engineer (MLE)

General Role: Developing and deploying machine learning models and integrating them into data pipelines. It’s important to understand how to efficiently process large volumes of data from analytical stores.

Skills: Machine learning frameworks (TensorFlow, PyTorch), programming (Python, R), data preprocessing, and model deployment.

Data Analytics/Insights Engineer (DA/DI)

General Role: Bridging the gap between data engineering and data analysis by transforming raw data into clean, usable datasets for analysis.

Skills: SQL, data transformation tools (dbt, Looker), business intelligence tools, and understanding of business metrics.

Many of these functions extend into and overlap with different parts of the data pipeline chain. For example, many of the recent machine learning roles have been filled by people with a more traditional data and software engineering background.

Rise of Big Data

The ability to process large volumes of data in order to derive signals created new product lines. Going from gigabytes to terabytes to petabytes in just a few decades created a sense that existing systems would not be able to process this ever-growing volume of data. Big Data technologies made a big entry into the market, and most companies wanted to migrate their legacy systems over. Starting my career at a large financial firm, I saw this trend firsthand.

In reality, though, the volume of data actually needed to gather meaningful insights and signals has remained relatively flat. Most use cases require access to recent data: customer orders generated today, new patient visits, sales per month. The days of the Big Data craze might be over.
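As a rough illustration of how small these everyday questions can be, the sketch below answers "sales per month" and "orders placed on a given day" with plain Python. The `orders` list and the helper names `sales_per_month` and `orders_on` are hypothetical and invented for this example.

```python
from collections import defaultdict
from datetime import date

# Hypothetical order records: (order date, amount).
orders = [
    (date(2024, 1, 15), 120.0),
    (date(2024, 1, 28), 80.0),
    (date(2024, 2, 3), 50.0),
    (date(2024, 2, 3), 25.0),
]


def sales_per_month(rows):
    """Sum order amounts, keyed by (year, month)."""
    totals = defaultdict(float)
    for day, amount in rows:
        totals[(day.year, day.month)] += amount
    return dict(totals)


def orders_on(rows, day):
    """Return the amounts of all orders placed on a specific day."""
    return [amount for d, amount in rows if d == day]


monthly = sales_per_month(orders)
todays = orders_on(orders, date(2024, 2, 3))
print(monthly)
print(todays)
```

Neither question needs a distributed cluster at this scale; a single machine or a single SQL query over recent rows is often enough.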

Broad Applicability

It’s clear that data is the fuel driving businesses around the world, from financial services to healthcare to the military. Here are a few examples of industries using data to their benefit:

Healthcare:

Predictive Analytics: Predicting patient outcomes and disease outbreaks using historical health data.

Drug Development: Studying a large patient cohort to confirm the impact of a drug or treatment.

Personalized Medicine: Tailoring treatments to individual patients based on genetic data and medical history.

Operational Efficiency: Optimizing hospital operations, managing staff schedules, and reducing patient wait times.

Finance:

Fraud Detection: Identifying fraudulent transactions and unusual account activities using machine learning algorithms.

Risk Management: Assessing credit risk and investment risks through data analysis.

Algorithmic Trading: Using real-time market data to make automated trading decisions.

Public Sector:

Urban Planning: Using data to design smarter cities with better infrastructure and services.

Public Health: Monitoring and controlling disease outbreaks through data analysis and modeling.

Policy Making: Using data to inform policy decisions and measure their impact.

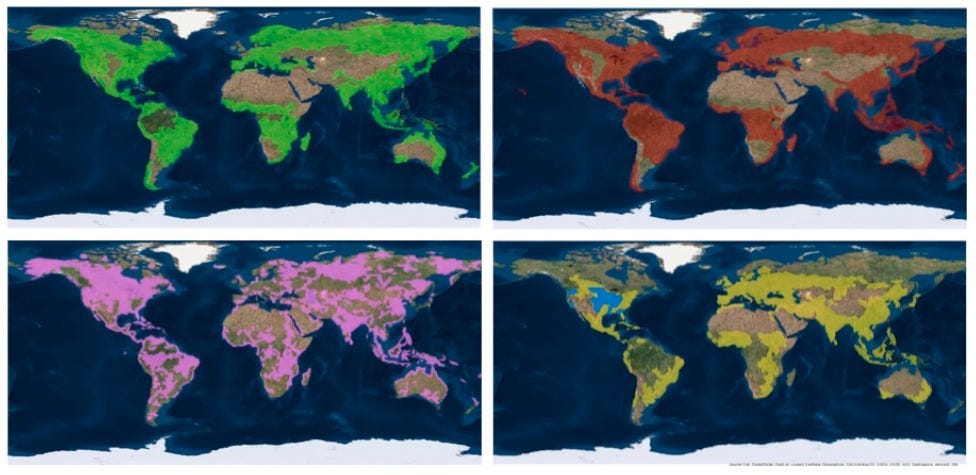

Climate Change:

Climate Modeling: Probably the only scenario where our probes gather data on other planets. By studying why Venus is around 900°F and Mars is bone dry, we have come a long way in figuring out what events led to their present conditions. Supplementing this with data from Earth’s own history has helped us build a reliable climate model.

Disaster Mitigation: Gathering more up-to-the-minute and accurate data on our planet can help us refine our predictions and make better public policy. The upcoming joint US and India NISAR mission is one example.

So if you’re interested in a technical field, want to have a direct impact on business outcomes and be able to apply skill sets across industries, data engineering is a perfect career choice.
