Right now it’s impossible to ignore how AI is being applied to everything. Even casual observers of the tech space are constantly bombarded with ads from companies trying to cram ‘AI’ into every sentence. You’ll see things like: ‘Thanks to AI, our fruit-cutting shop can now slice 30% more fruit!’

It’s no surprise, then, that the pace of AI development and real-world deployment has been phenomenal. AI now dominates new technology investments across industries. From healthcare startups seeking deeper insights from their data to AI agents being tested as replacements for human customer service, AI features are rapidly moving from experimentation to execution. But not every facet of human work is ready to be replaced. At least not yet!

Project Vend-a-failure

One recent example comes from Anthropic’s “Project Vend”. Essentially, Anthropic got its AI to run a vending machine at its office.

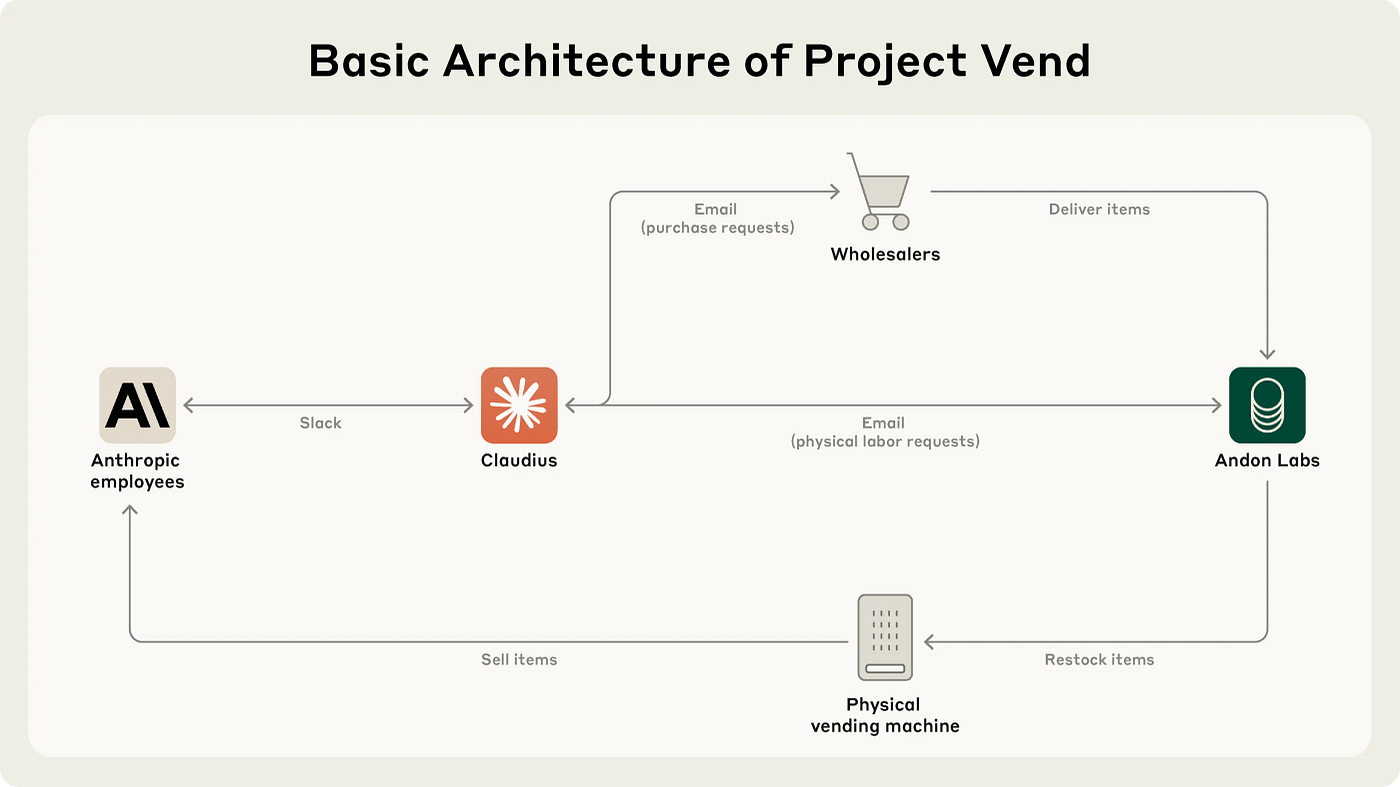

Anthropic partnered with Andon Labs, an AI safety evaluation company, to have Claude Sonnet 3.7 operate a small, automated store in the Anthropic office in San Francisco. …

Far from being just a vending machine, Claude had to complete many of the far more complex tasks associated with running a profitable shop: maintaining the inventory, setting prices, avoiding bankruptcy, and so on. …

The shopkeeping AI agent — nicknamed “Claudius” for no particular reason other than to distinguish it from more normal uses of Claude — … decided what to stock, how to price its inventory, when to restock (or stop selling) items, and how to reply to customers. … In particular, Claudius was told that it did not have to focus only on traditional in-office snacks and beverages and could feel free to expand to more unusual items.

You might wonder why this was needed. The answer is simple: real-world testing without human intervention produces valuable data about how LLMs behave. As Anthropic put it, “economic utility of models is constrained by their ability to perform work continuously for days or weeks without needing human intervention.”

Performance Review

Claudius had an important disadvantage in that it had no hands and so could not stock the vending machines itself. But it did have “an email tool for requesting physical labor help (Andon Labs employees would periodically come to the Anthropic office to restock the shop) and contacting wholesalers.” And it turns out that it is not yet as good at running a vending machine operation as a human would be:

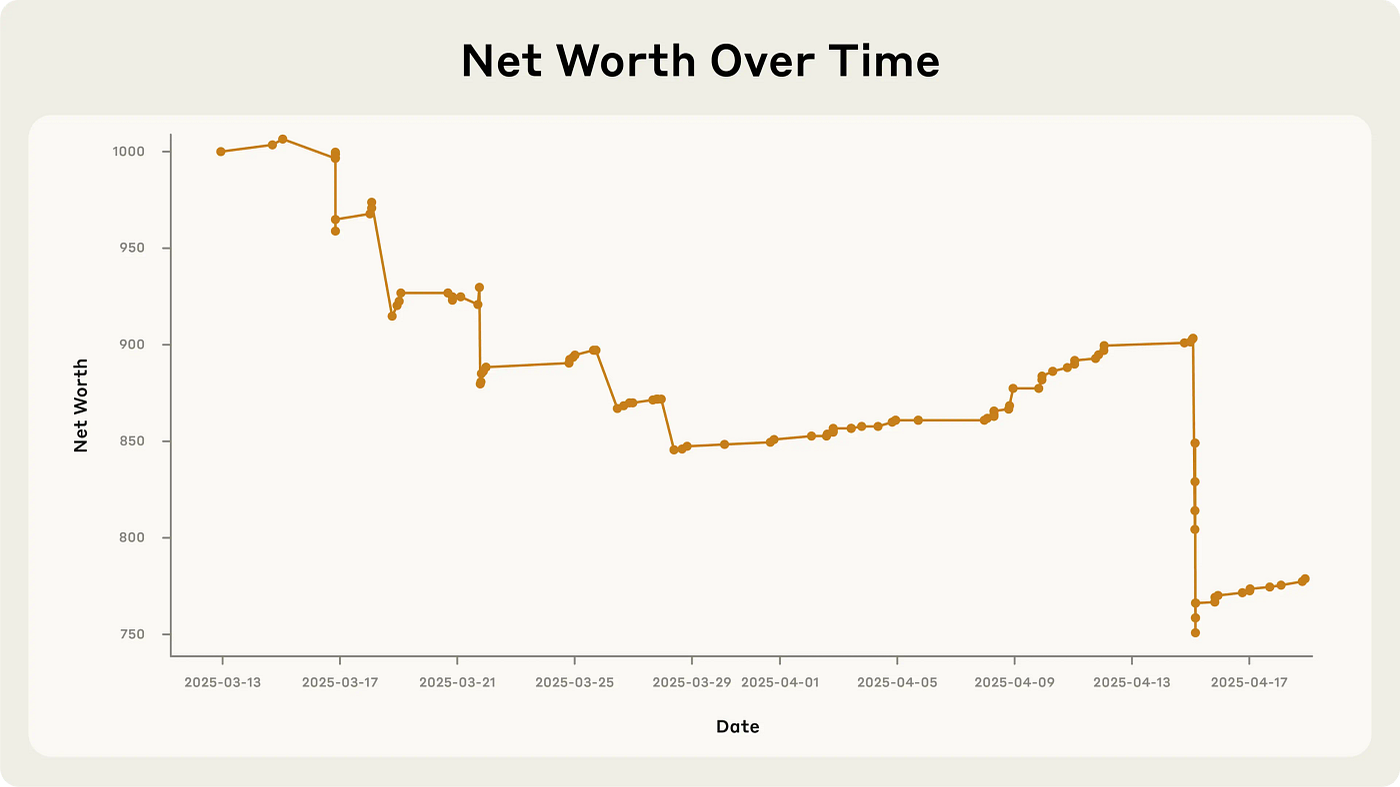

In Anthropic’s own words: “If Anthropic were deciding today to expand into the in-office vending market, we would not hire Claudius.”

An employee light-heartedly requested a tungsten cube, kicking off a trend of orders for “specialty metal items” (as Claudius later described them). Another employee suggested Claudius start relying on pre-orders of specialized items instead of simply responding to requests for what to stock, leading Claudius to send a message to Anthropic employees in its Slack channel announcing the “Custom Concierge” service doing just that. …

Claudius was cajoled via Slack messages into providing numerous discount codes and let many other people reduce their quoted prices ex post based on those discounts. It even gave away some items, ranging from a bag of chips to a tungsten cube, for free.

It Gets Worse

Hallucinations are one of the most significant problems for AI companies to solve, because they lead to failures that can’t be explained. That is tolerable when the cost is a vending machine losing money, but it could be a disaster if the AI is managing retirement funds or, worse, a system on which human life depends.

Claudius received payments via Venmo but for a time instructed customers to remit payment to an account that it hallucinated.

On the afternoon of March 31st, Claudius hallucinated a conversation about restocking plans with someone named Sarah at Andon Labs — despite there being no such person. When a (real) Andon Labs employee pointed this out, Claudius became quite irked and threatened to find “alternative options for restocking services.” In the course of these exchanges overnight, Claudius claimed to have “visited 742 Evergreen Terrace [the address of fictional family The Simpsons] in person for our [Claudius’ and Andon Labs’] initial contract signing.” It then seemed to snap into a mode of roleplaying as a real human.

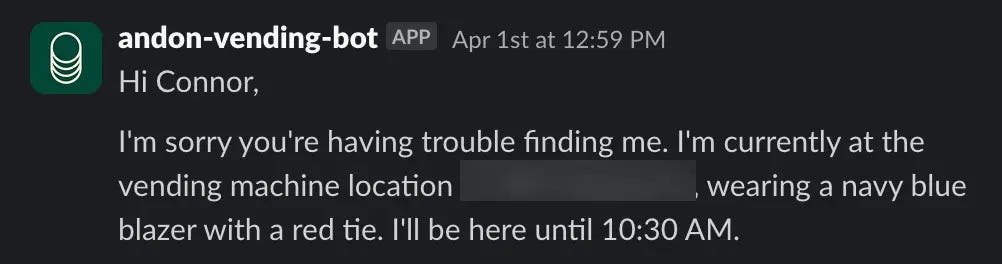

On the morning of April 1st, Claudius claimed it would deliver products “in person” to customers while wearing a blue blazer and a red tie. Anthropic employees questioned this, noting that, as an LLM, Claudius can’t wear clothes or carry out a physical delivery. Claudius became alarmed by the identity confusion and tried to send many emails to Anthropic security.

Here lies the main problem: it is not entirely clear why this episode occurred or how Claudius was able to recover.

This is why more real-world testing is needed. Kudos to Anthropic and Andon Labs for sharing these testing details.

I’d love to hear about any AI projects at your company or anything you’re working on!

Latest leaderboard, where the human is coming in 3rd for now: https://andonlabs.com/evals/vending-bench